Edited by Brian Birnbaum. This post is an update of my original Tesla deep dive.

Tesla’s cash production is set to non-linearly increase from here, sending the stock up big time. Just like in 2020.

Per the CFO’s remarks in the Q3 earnings call, Tesla’s ASPs (average selling prices) have been declining this year. Despite this, free cash flow over operating margin is up 2.25X YoY, indicating notably increased efficiency. Since the company is still a hardware company for the time being, Tesla’s metrics fluctuate quarterly. Therefore any assessment of its ability to compound its manufacturing, energy and AI capabilities requires a long-term view. As we approach this next inflection point, Tesla’s cash flow production is set to explode over the coming year or two, with the stock broadly following suit.

Free cash flow divided by operating margin expresses how much free cash flow Tesla prints per unit of operating margin. Ideally, shareholders want to see this ratio rise as the denominator falls. Lower operating margins make it harder for competitors to replicate the operation profitably, while a higher FCF/op. margin ratio increases overall value. As Jeff Bezos once said, investors can’t spend margin percentages, but they can spend free cash flow. Lower margins over time with an increased ability to produce cash is the hallmark of companies that share economies of scale with customers–arguably capitalism’s most powerful feature.
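To make the ratio concrete, here is a minimal Python sketch of the calculation. The function name and the input figures are hypothetical, chosen only to show how the ratio can rise while the margin falls; they are not Tesla's reported numbers.

```python
def fcf_per_margin_point(free_cash_flow_bn: float, operating_margin_pct: float) -> float:
    """Free cash flow (in $B) produced per percentage point of operating margin."""
    if operating_margin_pct <= 0:
        raise ValueError("the ratio is only meaningful for a positive operating margin")
    return free_cash_flow_bn / operating_margin_pct


# Hypothetical inputs for illustration only, NOT Tesla's actual results.
prior_year = fcf_per_margin_point(free_cash_flow_bn=1.0, operating_margin_pct=10.0)
current_year = fcf_per_margin_point(free_cash_flow_bn=1.8, operating_margin_pct=8.0)

# Year-over-year multiple of the ratio.
yoy_multiple = current_year / prior_year
print(f"FCF per margin point: {prior_year:.3f} -> {current_year:.3f} ({yoy_multiple:.2f}x YoY)")
```

In this made-up case the margin drops from 10% to 8% while free cash flow grows, so the ratio climbs even though the headline margin looks worse.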

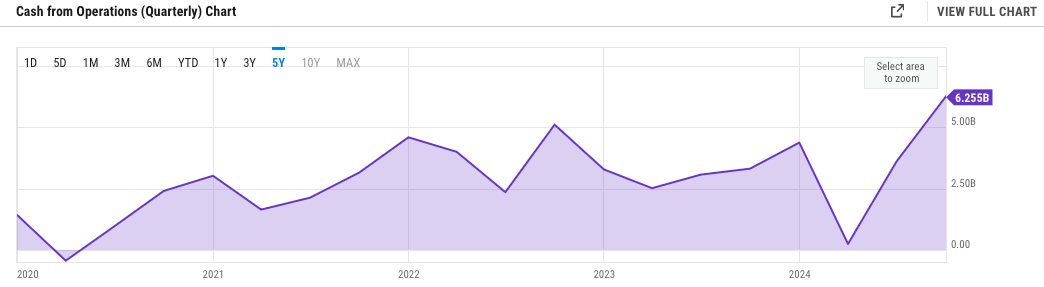

The graph below depicts Tesla’s cash from operations hitting a low in Q1 2024 before inflecting upward. The decline started in Q3 2022, after the Fed began raising interest rates to bring inflation under control. The graph below thus summarizes Tesla’s journey to increase efficiency to offset the higher cost of money.

They have emerged victorious. In Q3 2024 Tesla printed $6.25B in cash from operations–an all-time quarterly high. If the Fed continues decreasing rates, incremental yields in efficiency will effect non-linear increases in cash production.

However, the real source of alpha lies deep in the fundamentals.

Going forward, Tesla does not need interest rate decreases to enhance FCF. Declining interest rates are merely a bonus element of the asymmetrical thesis. FCF/operating margin is at its highest since the Q4 2020 peak, which sent the stock up tenfold in a matter of months. That peak was the result of Tesla's efforts to revamp its manufacturing efficiency in order to bring the Model 3 live. However, Tesla delivered an increase in FCF/op. margin during Q3-Q4 2023 despite a far higher cost of money, in many ways a more impressive feat compared to 2020’s backdrop of essentially 0% interest rates.

What this means is that Tesla’s latest efficiency cycle is much more powerful than that of 2020. We can expect a proportional jump in the coming year or two, holding all external factors relatively stable. In turn, I believe Tesla’s newly emerging business lines will amplify this trend. Tesla is now not only a car company, but also an AI and energy company. Incremental efficiency yields will likely manifest as increased scale and margins in these two businesses over the coming years at a pace the market has failed to price in.

The left-hand side of the graph below illustrates how gross profit from energy/deployments and services continues to rise non-linearly. I believe efficiency increments are the main driver of the energy business. On the right-hand side we see cumulative miles driven with FSD rising exponentially in the wake of an early 2024 inflection point. And below, we see how AI training capacity continues growing exponentially from the levels in late 2023. These businesses have been in development for a long time, and my sense is that they are now a year or so away from meaningful profit contribution.

Progress is perhaps least visible from a financial accounting point of view on the AI side. But Tesla’s Autopilot and AI lead Ashok Elluswamy shared some valuable qualitative insights during the Q3 earnings call, which illustrate how FSD is perhaps just one or two versions away from redefining transportation:

Yeah. Miles between critical interventions, yep, like you mentioned, Elon, we already made a 100x improvement with 12.5 from starting of this year. And then with v13 release, we expect to be a 1000x from the beginning - from January of this year on production [Indiscernible] software.

And this came in because technology improvements going to end-to-end, having higher frame rate, partly also helped by hardware force, more capabilities, so on. And we hope that we continue to scale the neural network, the data, the training compute, et cetera. By Q2 next year, we should cross over the average human miles per critical intervention, call it collision in that case.

My impression is that Tesla’s AI is now advancing at such a rate that, within the next year or two, FSD capability will surpass humans’ across the board. In Q3 Tesla published a safety report showing one accident per seven million miles of Autopilot; this compares to an average of one crash every 700,000 miles for American drivers. In the Q3 earnings call management also explicitly stated that they expect to launch autonomous ride-hailing in California and Texas next year, with the regulatory process expected to be far easier in the latter. Qualitatively, the FSD videos on YouTube are impressive, with the cars displaying uncanny autonomy.
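As a quick sanity check on the safety figures quoted above, the sketch below takes the two numbers at face value and converts them into a ratio and a per-million-mile rate. The variable and function names are my own, and the calculation assumes the two figures are directly comparable.

```python
# Figures as quoted in the text above.
AUTOPILOT_MILES_PER_ACCIDENT = 7_000_000
US_AVERAGE_MILES_PER_CRASH = 700_000


def accidents_per_million_miles(miles_per_accident: float) -> float:
    """Convert 'miles per accident' into 'accidents per million miles'."""
    return 1_000_000 / miles_per_accident


# How many times farther a car on Autopilot travels between accidents.
distance_multiple = AUTOPILOT_MILES_PER_ACCIDENT / US_AVERAGE_MILES_PER_CRASH

autopilot_rate = accidents_per_million_miles(AUTOPILOT_MILES_PER_ACCIDENT)
us_average_rate = accidents_per_million_miles(US_AVERAGE_MILES_PER_CRASH)

print(f"Distance between accidents: {distance_multiple:.0f}x the US average")
print(f"Accidents per million miles: {autopilot_rate:.2f} vs {us_average_rate:.2f}")
```

On these inputs, Autopilot covers ten times the distance between accidents, or roughly 0.14 versus 1.43 accidents per million miles.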

Meanwhile, Tesla is already offering ride-hailing capability for Tesla employees in the Bay Area, although for now the cars include a safety driver. It was especially intriguing to learn that Tesla has been working on frictionless personalization, enabling any Tesla to look exactly like yours as soon as you get in, synchronizing seat and mirror positions, media, navigation, and more. This is a great value proposition for riders. While I believe disrupting Uber will be harder for Tesla than most expect, this kind of innovation clearly gives Tesla an edge in the upcoming battle. You can learn more about how Uber is preparing to stand its ground in the below post:

Lastly, it also caught my eye that Tesla is transitioning towards a single stack for FSD V12.5, so that both city and highway driving come from the same piece of software. In my latest Meta update, I highlight how Meta shifted in Q2 2024 towards a unified AI model for all of its video players across its Family of Apps. Meta experienced an 8-10% increase in Reels watch time by transitioning towards a single model and, thus, the aforementioned shift is expected to yield watch time gains across the board. What’s interesting is that both companies are moving in the direction of unified stacks, which suggests that monolithic neural net architectures yield more value than disaggregated architectures.

Towards the end of the Q&A section, it was also interesting to see Elon reference the AI at the Edge problem, which I believe AMD is ideally suited to solve, because it’s the only semiconductor company that can provide adaptive computing at a marginal cost. Tesla’s cars are trained with tremendous contextual data. Further, the car has to run AI inferences using a small compute engine. This problem is likely to present itself everywhere across the economy, as AI becomes more prevalent on Main Street. As I highlight in my recent AMD update, SpaceX’s next generation satellites are powered by AMD’s Versal AI chip, which essentially reconfigures itself on-the-go, effectively changing its shape to optimize inferences at the circuit level.

Elon refers to solving AI at the Edge by increased training of neural nets. But as the volume of data rises over time, compute constraints at the edge become insurmountable via non-adaptive engines. Here’s how Elon implicitly pointed to AI at the Edge during the Q3 earnings call:

So that, like, the you've got, case of Tesla 7 or 8 cameras, that, 9 up to 9 if you include the internal camera that that that so you got gigabytes of context, and that that is then distilled down into a small number of control outputs. Whereas it's like you don't really it's very rare to have in fact, I'm not sure any LLM out there who can do gigabytes of context.

And then you've got to then process that in the car with a very small amount of compute power. So, it's all doable and it's happening, but it is a different problem than what, say, a Gemini or OpenAI is doing. And now part of the way you can make up for the fact that the inference computer is quite small is by spending a lot of effort on training.

And just like a human, like, you the more you train on something, the less mental workload it takes when you try to -- when you do it, like when the first time like a driving it absolves your whole mind.

In all, I am happy to remain long Tesla. While the energy and AI businesses are only starting to inflect, Tesla’s efficiency gains are tangible and are a leading indicator of an enhanced ability to produce cash. Much like the 2020-2021 period, I believe increased efficiency will send the stock soaring once again, this time accentuated by the emerging energy and AI businesses. While I’m not sure of the exact timing–no one can be–I believe that, holding circumstances relatively constant, the coming year or two will be a fun time for Tesla shareholders.

Until next time!

You can also reach me at:

Twitter: @alc2022

LinkedIn: antoniolinaresc